Daily exercise is moral

Daily exercise is moral. There, I said it. It is an unusual sentence, but one that is logically correct and, I would argue, more truthful and honest than any other statement about exercise.

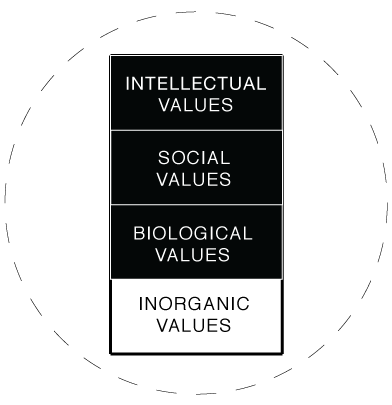

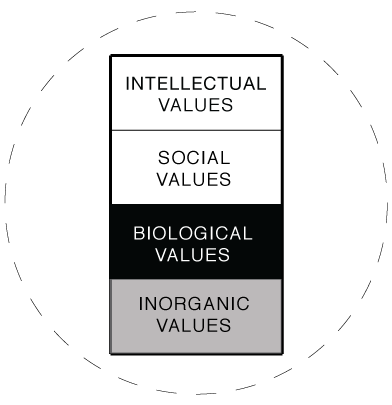

It's a sentence I can only say because I'm using a metaphysics built for the 21st century: a metaphysics that allows us to move beyond all traditional interpretations of morality and place even everyday activities in a moral context. That moral context isn't just true for me in my place and time, as our current morality would say; it's true and moral for all people, everywhere.

I can say this because I can justify the sentence philosophically and logically using the Metaphysics of Quality (MOQ). Unlike Zazen, as described in an earlier post, exercise, as static quality, does have clear qualitative justifications for why it's a good thing. In fact, while it is fundamentally a biological activity, it's good on every level, and this is really why it's so moral to do it every day.

For example, at the intellectual level, science is increasingly demonstrating the benefits of regular exercise, which has been shown to benefit the health of both body and mind in many ways. But the biological benefit of feeling good and the social benefit of looking good are both empirically verifiable and legitimate as well.

As an aside, this is another reason why the celebrities I wrote about in my previous post are so good. They show that you can exercise at a professional level and still maintain a moral vegan diet, both of which are supported by the MOQ.

Personally, I've recently been spending up to two hours a day at the gym doing various cardio and strength-based exercises. But for someone with limited free time, studies are increasingly showing that High Intensity Interval Training (HIIT) is an effective way of getting fit and healthy in very little time.

Along these lines, please enjoy the video above showing a daily HIIT routine that almost anyone can do, as long as they honestly push themselves through it.